Caching

For extreme load or denial of service attacks, it might be too expensive to call Redis for every incoming request, just to find out that it should be blocked because the caller has exceeded the limit. You can use an ephemeral in-memory cache by passing a variable of type `Map<string, number>` as the `ephemeralCache` option. If no value is provided, `ephemeralCache` will be initialized with `new Map()`. To disable the cache, pass `ephemeralCache: false`.

If enabled, the ratelimiter will keep track of the blocked identifiers and their reset timestamps. When a request is received with some identifier `ip1` before the reset time of `ip1`, the request will be denied without having to call Redis.
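The blocking behaviour described above can be modelled in a few lines. This is an illustrative sketch only, not the library's internal code: the `BlockCache` class and its method names are hypothetical, and the current time is passed in explicitly to keep the example deterministic.

```typescript
// Illustrative model of an ephemeral block cache (hypothetical names, not library internals).
class BlockCache {
  // identifier -> reset timestamp in milliseconds, i.e. the Map<string, number> shape
  private readonly cache: Map<string, number>;

  constructor(cache: Map<string, number> = new Map()) {
    this.cache = cache;
  }

  // True if the identifier is known to be blocked at time `now`.
  isBlocked(identifier: string, now: number): boolean {
    const reset = this.cache.get(identifier);
    if (reset === undefined) return false;
    if (reset <= now) {
      // The window has reset, so forget the entry and fall through to Redis.
      this.cache.delete(identifier);
      return false;
    }
    return true;
  }

  // Remember that the identifier is blocked until `reset`.
  blockUntil(identifier: string, reset: number): void {
    this.cache.set(identifier, reset);
  }
}

const cache = new BlockCache();
cache.blockUntil("ip1", 2000); // Redis reported ip1 as over the limit until t = 2000

// Before the reset time the request can be denied locally, without calling Redis.
const deniedLocally = cache.isBlocked("ip1", 1500);
// After the reset time the entry is dropped and the request must go to Redis again.
const mustAskRedis = !cache.isBlocked("ip1", 2500);
```

Only blocked identifiers are stored, so the cache stays small under normal traffic and only grows when requests are actually being rejected.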

In serverless environments this is only possible if you create the cache or ratelimiter instance outside of your handler function. While the function is still hot, the ratelimiter can block requests without having to request data from Redis, thus saving time and money.
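A minimal sketch of creating the cache outside the handler follows. It assumes the `Ratelimit` constructor and `Ratelimit.slidingWindow` from `@upstash/ratelimit` and `Redis.fromEnv()` from `@upstash/redis`; the `handler` function, its signature, and the limit values are placeholders to adapt to your platform.

```typescript
import { Ratelimit } from "@upstash/ratelimit";
import { Redis } from "@upstash/redis";

// Created once per warm instance, outside the handler, so it survives between invocations.
const cache = new Map<string, number>();

const ratelimit = new Ratelimit({
  redis: Redis.fromEnv(),
  limiter: Ratelimit.slidingWindow(10, "10 s"), // placeholder limit
  ephemeralCache: cache, // pass `false` instead to disable the cache
});

// Hypothetical handler; the real signature depends on your serverless platform.
export async function handler(ip: string): Promise<Response> {
  const { success } = await ratelimit.limit(ip);
  return success
    ? new Response("ok")
    : new Response("Too many requests", { status: 429 });
}
```

If the `Map` or the `Ratelimit` instance were created inside `handler`, every invocation would start with an empty cache and each blocked request would still reach Redis.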

See the section on how caching impacts the cost in the costs page.

Timeout

You can define an optional timeout in milliseconds, after which the request will be allowed to pass regardless of what the current limit is. This can be useful if you don’t want network issues to cause your application to reject requests. A default timeout applies if no `timeout` is provided.
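The fail-open behaviour can be illustrated with a small self-contained sketch. This is not the library's implementation: `withTimeout` and `LimitResult` are hypothetical names, and the sketch simply lets a timer win over a slow check and resolve to an allowed result.

```typescript
interface LimitResult {
  success: boolean;
  reason?: "timeout";
}

// Run the real check, but allow the request if the check takes longer than `timeoutMs`.
function withTimeout(
  check: () => Promise<LimitResult>,
  timeoutMs: number,
): Promise<LimitResult> {
  return new Promise<LimitResult>((resolve, reject) => {
    const timer = setTimeout(
      () => resolve({ success: true, reason: "timeout" }),
      timeoutMs,
    );
    check().then(
      (result) => {
        clearTimeout(timer); // the check finished first, so cancel the fallback
        resolve(result);
      },
      (error) => {
        clearTimeout(timer);
        reject(error);
      },
    );
  });
}

// Simulates a Redis call that hangs because of a network problem.
const hangingCheck = () => new Promise<LimitResult>(() => {});

// Resolves after roughly 50 ms with an allowed result instead of hanging forever.
const timedOut = withTimeout(hangingCheck, 50);
```

Failing open trades strictness for availability: during a network outage some requests above the limit may get through, but legitimate traffic is not rejected.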

Analytics & Dashboard

Another feature of the ratelimiter is collecting analytics. By default, analytics is disabled. To enable it, set the `analytics` parameter to `true`.

Every time `ratelimit.limit()` is called, analytics will be sent to the Redis database (see costs page), and information about the hour, the identifier, and the number of rate limit successes and failures will be collected. This information can be viewed from the Upstash console.

If you are using rate limiting in Cloudflare Workers, Vercel Edge or a similar environment, you need to make sure that the analytics request is delivered correctly to Redis. Otherwise, you may observe lower numbers than the actual number of calls.

To make sure that the request completes, you can use the `pending` field returned by the `limit` method. See the Asynchronous synchronization between databases section to see how `pending` can be used.

Dashboard

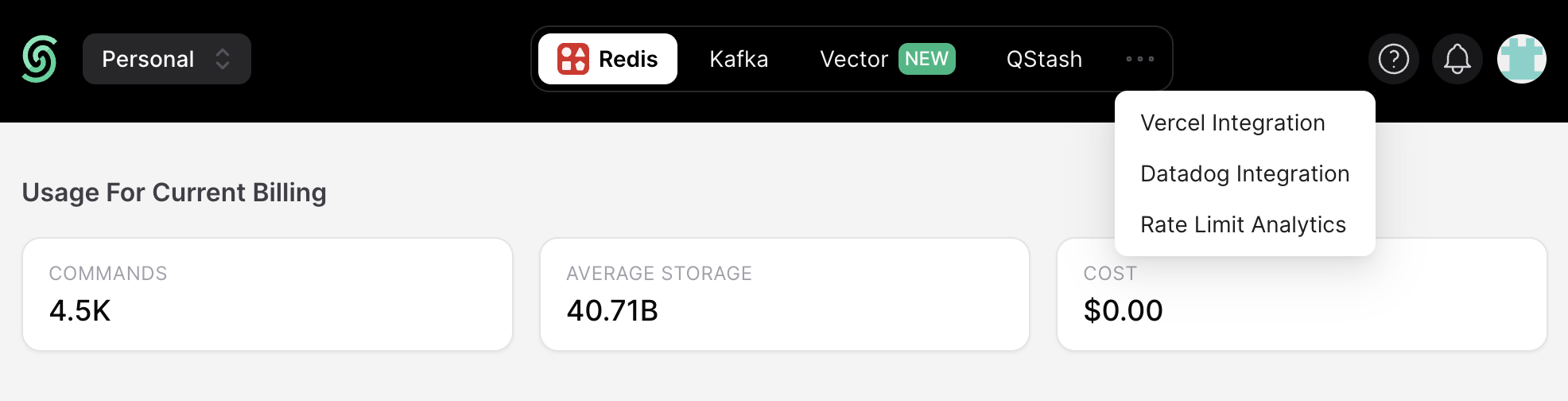

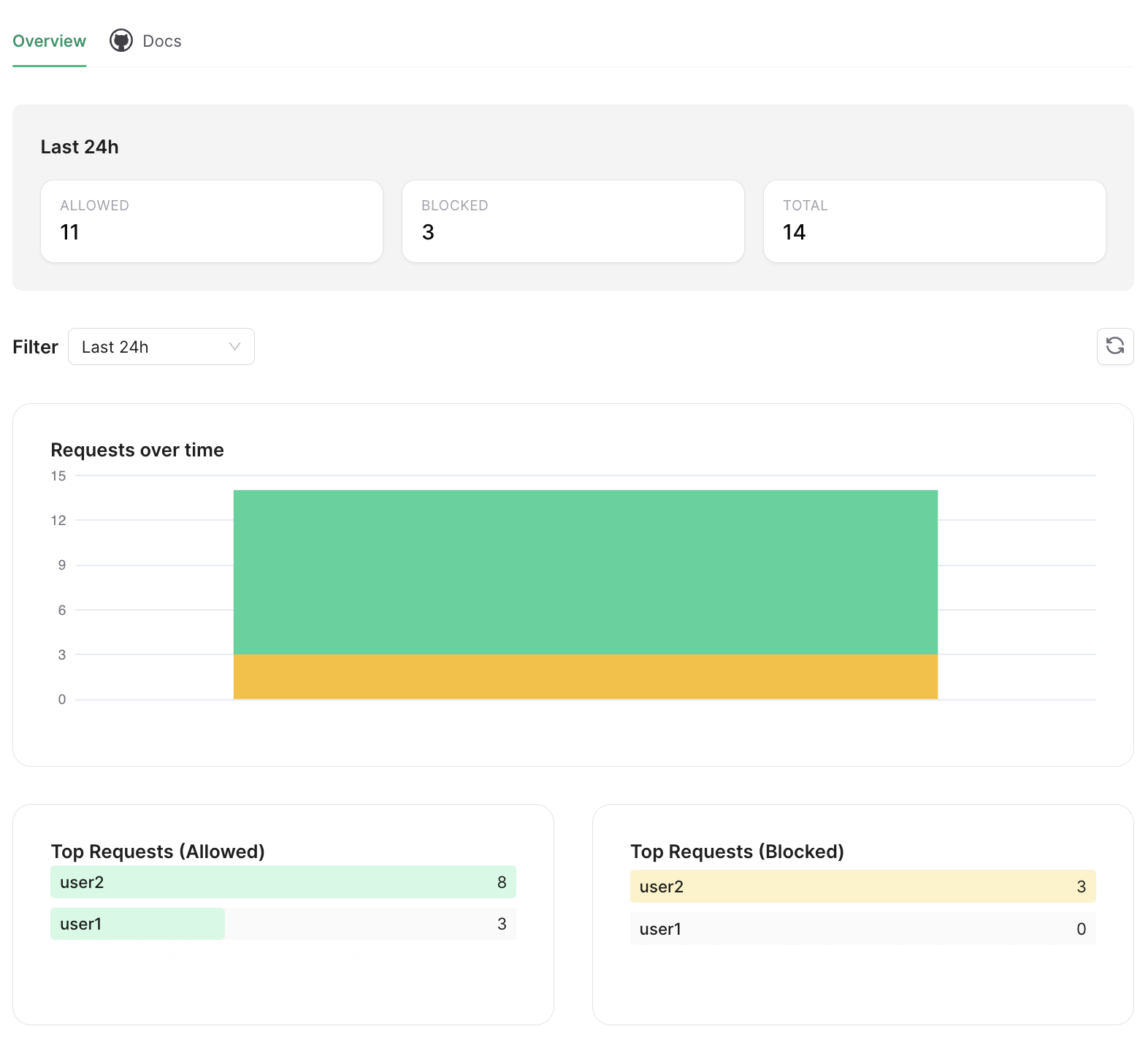

If analytics is enabled, the Rate Limit dashboard in the Upstash Console shows how many requests were made with which identifiers and how many of those requests were blocked. To find the dashboard, click the three dots and choose the “Rate Limit Analytics” tab.

In the dashboard, you can find information on how many requests were accepted, how many were blocked, and how many were received in total. Additionally, you can see requests over time, as well as the top allowed and blocked requests.

If you are using a custom prefix, you need to enter the same prefix in the dashboard’s top right corner.

Using Multiple Limits
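The sketch below shows one way to set up per-tier limits: one `Ratelimit` instance per tier, each with its own prefix so the counters stay separate in Redis. It assumes the `Ratelimit` constructor and `Ratelimit.slidingWindow` from `@upstash/ratelimit` and `Redis.fromEnv()` from `@upstash/redis`; the `checkLimit` helper, the tier names, and the prefix values are illustrative.

```typescript
import { Ratelimit } from "@upstash/ratelimit";
import { Redis } from "@upstash/redis";

const redis = Redis.fromEnv();

// One limiter per tier; distinct prefixes keep the counters separate.
const ratelimit = {
  free: new Ratelimit({
    redis,
    analytics: true,
    prefix: "ratelimit:free",
    limiter: Ratelimit.slidingWindow(10, "10 s"),
  }),
  paid: new Ratelimit({
    redis,
    analytics: true,
    prefix: "ratelimit:paid",
    limiter: Ratelimit.slidingWindow(60, "10 s"),
  }),
};

// Hypothetical helper: pick the limiter that matches the user's tier.
export async function checkLimit(
  tier: "free" | "paid",
  identifier: string,
): Promise<boolean> {
  const { success } = await ratelimit[tier].limit(identifier);
  return success;
}
```

For free users the identifier might be an IP address, while for paid users a user ID or email is usually available.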

Sometimes you might want to apply different limits to different users. For example, you might want to allow 10 requests per 10 seconds for free users, but 60 requests per 10 seconds for paid users. One way to do that is to create a separate ratelimiter instance for each group of users.

Custom Rates

When we call `limit`, it subtracts 1 from the number of calls/tokens available in the timeframe by default. But there are use cases where we may want to subtract different numbers depending on the request.

Consider a case where we receive some input from the user either alone or in batches. If we want to rate limit based on the number of inputs the user can send, we need a way of specifying what value to subtract.

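This is possible thanks to the `rate` parameter. Pass it when calling the `limit` method, setting `rate` to `batchSize`, the number of inputs in the request. The call then subtracts `batchSize` from the available calls/tokens instead of 1.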
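To make the effect of `rate` concrete, here is a small in-memory model of a single window. It is a simplified sketch, not the library's implementation: the `WindowCounter` class is hypothetical, it ignores window expiry, and the real algorithms may account for rejected requests differently. It only mirrors the call shape `limit(identifier, { rate })`, which is assumed here to be the options-object form of the `rate` parameter.

```typescript
// In-memory model of one window (hypothetical; ignores window expiry for brevity).
class WindowCounter {
  private readonly used = new Map<string, number>();
  private readonly tokens: number;

  constructor(tokens: number) {
    this.tokens = tokens;
  }

  // Subtracts `rate` tokens (default 1) and reports whether the request fits.
  limit(identifier: string, options: { rate?: number } = {}) {
    const rate = options.rate ?? 1;
    const used = this.used.get(identifier) ?? 0;
    if (used + rate > this.tokens) {
      return { success: false, remaining: this.tokens - used };
    }
    this.used.set(identifier, used + rate);
    return { success: true, remaining: this.tokens - used - rate };
  }
}

const counter = new WindowCounter(10); // 10 inputs per window

const first = counter.limit("user1", { rate: 4 }); // batch of 4, 6 tokens left
const second = counter.limit("user1", { rate: 4 }); // batch of 4, 2 tokens left
const third = counter.limit("user1", { rate: 4 }); // does not fit, rejected
const single = counter.limit("user1"); // default rate of 1, 1 token left
```

With a plain `limit(identifier)` call, each batch would cost a single token regardless of its size, so the limit would count requests rather than inputs.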

Multi Region

Let’s assume you have customers in the US and Europe. In this case you can create 2 regional Redis databases on Upstash and your users will enjoy the latency of whichever db is closest to them. Using a single Redis instance has the downside of providing low latencies only to the part of your userbase closest to the deployed db. That’s why we also built `MultiRegionRatelimit`, which replicates the state across multiple Redis databases and offers lower latencies to more of your users.

`MultiRegionRatelimit` does this by checking the current limit in the closest db and returning immediately. Only afterwards will the state be asynchronously replicated to the other databases leveraging CRDTs. Due to the nature of distributed systems, there is no way to guarantee the set ratelimit is not exceeded by a small margin. This is the tradeoff for reduced global latency.

Usage
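A minimal sketch of a two-region setup follows. It assumes `MultiRegionRatelimit` and its `slidingWindow` limiter from `@upstash/ratelimit` and the `Redis` constructor from `@upstash/redis`; the environment variable names, the limit values, and the `isAllowed` helper are hypothetical.

```typescript
import { MultiRegionRatelimit } from "@upstash/ratelimit";
import { Redis } from "@upstash/redis";

const ratelimit = new MultiRegionRatelimit({
  // One Redis client per regional database (hypothetical environment variable names).
  redis: [
    new Redis({
      url: process.env.US_REDIS_URL!,
      token: process.env.US_REDIS_TOKEN!,
    }),
    new Redis({
      url: process.env.EU_REDIS_URL!,
      token: process.env.EU_REDIS_TOKEN!,
    }),
  ],
  limiter: MultiRegionRatelimit.slidingWindow(10, "10 s"),
  analytics: true,
});

// Hypothetical helper wrapping the limit call.
export async function isAllowed(identifier: string): Promise<boolean> {
  const { success } = await ratelimit.limit(identifier);
  return success;
}
```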

The API is the same, except that it asks for multiple Redis instances.

Asynchronous synchronization between databases

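The MultiRegion setup will do some synchronization between databases after returning the current limit. This can lead to problems on Cloudflare Workers and therefore Vercel Edge functions, because dangling promises must be taken care of. Pass the `pending` promise returned by `limit` to the platform's `waitUntil` so that the runtime stays alive until synchronization finishes. See the `waitUntil` documentation in Cloudflare and Vercel for more details.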
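The pattern can be sketched without depending on a specific runtime. The interfaces below are hypothetical minimal shapes: `Limiter` stands in for a ratelimiter whose `limit` method returns `success` and `pending`, and `ExecutionContextLike` stands in for whatever object exposes `waitUntil` on your platform.

```typescript
// Minimal structural types so the sketch is self-contained (hypothetical shapes).
interface LimitResponse {
  success: boolean;
  pending: Promise<unknown>;
}
interface Limiter {
  limit(identifier: string): Promise<LimitResponse>;
}
interface ExecutionContextLike {
  waitUntil(promise: Promise<unknown>): void;
}

async function handle(
  limiter: Limiter,
  ctx: ExecutionContextLike,
  identifier: string,
): Promise<number> {
  const { success, pending } = await limiter.limit(identifier);
  // Hand the background work to the runtime so it is not cut off once the response is sent.
  ctx.waitUntil(pending);
  return success ? 200 : 429;
}

// Stand-ins used to exercise the sketch without Redis or a real edge runtime.
const registered: Promise<unknown>[] = [];
const fakeCtx: ExecutionContextLike = {
  waitUntil: (promise) => {
    registered.push(promise);
  },
};
const fakeLimiter: Limiter = {
  limit: async () => ({ success: true, pending: Promise.resolve() }),
};

const statusPromise = handle(fakeLimiter, fakeCtx, "ip1");
```

The handler does not await `pending` itself, so the response is not delayed by synchronization or analytics; the runtime just keeps the invocation alive until the promise settles.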